Browser automation tools are built to make testing tasks, and web scraping along with them, easier and more accurate. To some people, web scraping may sound dubious, but many organizations do it for legitimate purposes. Some of the reasons you may want to scrape the web are:

Gather contact information: Thousands of websites contain phone numbers and email addresses, and a web scraping tool can help you extract the contact details of suppliers, jobseekers, or anyone else who is useful to your organization.

Market research: With the help of a web scraping tool, you can extract data related to your industry from competitors, market research organizations, and data analytics sites. Market research will help you know the latest trends in your industry, and even where it is going in the near future, making it a crucial activity for your business to stay ahead.

Monitor market prices: Whether you own an e-commerce business or are a regular online shopper, web scraping can help you monitor the prices of commodities across hundreds of online stores.
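
To make the price-monitoring case concrete, here is a minimal sketch that uses only Python's standard library. The store names, the sample HTML, and the assumption that each store marks its price with a `price` class are all hypothetical; real stores use their own markup, and the pages would first have to be downloaded.

```python
from decimal import Decimal
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of every element whose class attribute includes 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # The class attribute may be missing or empty, so fall back to "".
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_data(self, data):
        text = data.strip()
        if self._in_price and text:
            # Assumes prices look like "$1,234.56" (hypothetical format).
            self.prices.append(Decimal(text.lstrip("$").replace(",", "")))
            self._in_price = False


def extract_prices(html):
    parser = PriceParser()
    parser.feed(html)
    return parser.prices


# Hypothetical pages, standing in for HTML fetched from two stores.
SAMPLE_PAGES = {
    "store-a.example": '<div><span class="price">$19.99</span></div>',
    "store-b.example": '<div><span class="price sale">$17.50</span></div>',
}


def cheapest(pages):
    """Return (store, price) for the lowest price found across all pages."""
    offers = {store: min(extract_prices(html)) for store, html in pages.items()}
    best = min(offers, key=offers.get)
    return best, offers[best]
```

Calling `cheapest(SAMPLE_PAGES)` compares the lowest price found on each sample page and returns the store that offers it.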

There are more uses of web scraping, and even recruiters looking for employees can benefit from a browser automation tool since it can help them gather a pool of qualified candidates for a certain job position. Jobseekers, on the other hand, can likewise scrape the web for employers looking to hire people with a certain skill set.

In addition, if you are going somewhere with a limited Internet connection and you know you’ll need something to read, you may also scrape the web to download pages on a particular topic that you can read while offline.

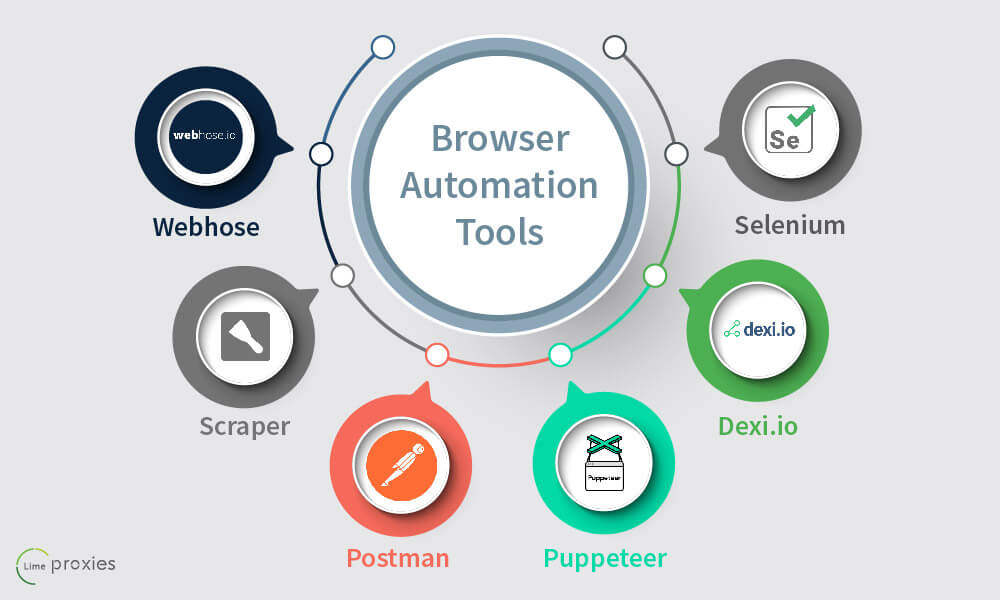

BROWSER AUTOMATION TOOLS

Web scraping can be done manually, but it can be quite tedious, not to mention prone to mistakes, since it is easy to overlook an important variable. Most people use a web automation tool when they scrape the web. Here are some browser automation tools you can use:

SELENIUM

Selenium is an open-source test automation tool that is widely used by programmers and testers. As a matter of fact, Katalon’s survey on Test Automation Challenges revealed that 84% of testers are using or have used Selenium. The tool requires users to have an advanced level of programming skills, since they need to develop libraries and frameworks before they can start automating.

While this can be a turn-off to users who only know basic programming, it also means a high level of customization. Users can create their own scripts in different programming languages such as Groovy, Python, Java, C#, Ruby, Perl, and PHP. These scripts can also run on different browsers, including headless browsers, and on most operating systems.
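
As an illustration of such a script, here is a minimal Python sketch. It assumes Selenium 4 and a local Chrome installation; the `h2` selector, the window size, and the helper names are hypothetical choices for this example, not part of Selenium itself.

```python
def chrome_arguments(headless=True, window_size=(1280, 800)):
    """Build the command-line switches passed to Chrome."""
    args = [f"--window-size={window_size[0]},{window_size[1]}"]
    if headless:
        # "--headless=new" selects Chrome's current headless mode.
        args.append("--headless=new")
    return args


def scrape_headings(url, css_selector="h2"):
    """Open `url` in headless Chrome and return the text of matching elements."""
    # Imported here so the helper above can be used without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By

    options = Options()
    for arg in chrome_arguments():
        options.add_argument(arg)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return [el.text for el in driver.find_elements(By.CSS_SELECTOR, css_selector)]
    finally:
        # Always close the browser, even if the page fails to load.
        driver.quit()
```

A call such as `scrape_headings("https://example.com")` would start the browser, load the page, and return the heading texts. Changing `headless` to `False` in `chrome_arguments` shows the browser window, which can help while debugging a script.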

DEXI.IO

Dexi.io, formerly called Cloudscrape, is a web browser-based tool and therefore does not need to be downloaded. All the data you collect can be saved on Google Drive or on any cloud platform. There is also an export function, so you can download the collected data as JSON or CSV. The web scraper will archive your data after two weeks of storage.

Dexi.io allows you to hide your real identity by using proxy servers, which is advisable regardless of the browser automation tool you are using. The web scraper offers a 20-hour free trial, and after that, it will cost you $29 a month to continue using the tool.
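
To show what the JSON and CSV export formats mentioned above look like for scraped records, here is a generic sketch using Python's standard library. It does not use Dexi.io's own export feature or API, and the sample records are hypothetical.

```python
import csv
import io
import json

# Hypothetical scraped records.
RECORDS = [
    {"name": "Widget", "price": "19.99"},
    {"name": "Gadget", "price": "24.50"},
]


def to_json(records):
    """Serialize the records as a JSON array of objects."""
    return json.dumps(records, indent=2)


def to_csv(records):
    """Serialize the records as CSV, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

JSON keeps nested structure and is convenient for other programs, while CSV opens directly in a spreadsheet.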

PUPPETEER

Puppeteer is a relatively new web scraping tool, first released by Google in 2017, that works with headless Chromium or Chrome. Aside from crawling single-page applications (SPAs), Puppeteer also lets users do most of the things they normally do in a regular browser, such as:

- Test Chrome extensions.

- Create and run automated tests, including UI tests, in real time.

- Automate form submission.

- Record the runtime performance of your website.

Puppeteer, although developed by Chrome’s development team, is also open-source.

WEBHOSE

Webhose uses an exclusive browser-based technology to crawl data from thousands of websites. Like most web scraping tools, Webhose can be tapped to aid in financial analysis, market research, AI learning, and web monitoring. It can also be used in cybersecurity threat intelligence and data breach detection.

Webhose users can extract data in over 250 languages, which can be exported as JSON, XML, or other formats. The company behind the web scraper offers a free trial with 1,000 monthly requests for data from news, reviews, blogs, and online discussions.

POSTMAN

Postman is an automation tool that can be installed either as a desktop application (for Linux, Mac, and Windows operating systems) or as a browser extension. It is widely used for API testing by both testers and developers since it is also a development environment.

For individuals and smaller teams, the tool is offered free of charge. This free plan includes 1,000 calls a month. A pro account suitable for team projects with 50 users is priced at $8 per user, per month and already includes premium features like professional collaboration. The whole team is allowed a maximum of 10,000 calls a month.

SCRAPER

Scraper is a Chrome extension that works as a web scraping tool. It is primarily designed for beginners, although a lot of advanced users also use it to copy data and save it in spreadsheets. Although it only allows for limited data extraction, it is still quite handy when doing online research. You can export collected data to Google Spreadsheets.

Scraper is indeed a simple data mining browser extension as it automatically generates small XPaths when defining the URLs for crawling.
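
For readers new to XPath, the sketch below shows how a short XPath expression selects data from markup. It uses Python's standard library rather than the Scraper extension, and the sample table is hypothetical. Note that `xml.etree.ElementTree` supports only a subset of XPath and needs well-formed markup.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for part of a scraped page.
SAMPLE = """<table>
  <tr><td class="name">Widget</td><td class="price">19.99</td></tr>
  <tr><td class="name">Gadget</td><td class="price">24.50</td></tr>
</table>"""


def select_text(markup, xpath):
    """Return the text of every element matched by the XPath expression."""
    root = ET.fromstring(markup)
    return [element.text for element in root.findall(xpath)]


# ".//td[@class='price']" means: any <td> below the root whose class is "price".
prices = select_text(SAMPLE, ".//td[@class='price']")
names = select_text(SAMPLE, ".//td[@class='name']")
```

Here `prices` holds the two price cells and `names` the two product names, in document order.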


WHAT ARE HEADLESS BROWSERS?

For most, if not all, of these automation tools, a headless browser helps make the process look more natural and organic, because pages are loaded and their scripts are executed by a real browser engine. For this reason, using a headless browser also makes web scraping a lot more successful. It is called “headless” because it does not have the graphical user interface of a regular web browser. Instead, users control it from a command-line interface or from a script.
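
As a rough sketch of the command-line route, the Python snippet below builds a headless Chrome command that prints a page's rendered HTML. The binary name `google-chrome` is an assumption that varies by operating system and installation, and the helper names are hypothetical.

```python
import subprocess


def dump_dom_command(url, binary="google-chrome"):
    """Build a command that renders `url` without a window and prints its DOM."""
    return [binary, "--headless", "--disable-gpu", "--dump-dom", url]


def fetch_rendered_html(url, binary="google-chrome", timeout=30):
    """Run headless Chrome and return the page's HTML after scripts have run."""
    result = subprocess.run(
        dump_dom_command(url, binary),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise if Chrome exits with an error
    )
    return result.stdout
```

Calling `fetch_rendered_html("https://example.com")` requires Chrome to be installed and reachable under the given binary name.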


MOST POPULAR HEADLESS BROWSERS USED IN AUTOMATION TESTING AND WEB SCRAPING:

- Headless Chrome

- PhantomJS

- Firefox Headless Mode

- HtmlUnit

- ZombieJS

- Splash

Any of these browsers gives testers and developers a realistic testing environment and enables users to scrape the web using browser automation tools and proxy servers for anonymity.

DIFFERENCES BETWEEN BROWSER AUTOMATION TOOLS

The browser automation tool you should choose depends on a number of factors, including your level of programming and technical skills. If you have advanced programming skills, or you can hire a seasoned programmer, Selenium is a very good choice. However, if you prefer a tool with a simple and easy-to-use interface, the other web scraping tools can work perfectly well. Novices, for instance, can start with the free Scraper browser extension and upgrade to more advanced tools as they learn more about web scraping.

Budget, of course, should also be an important consideration. While most of the tools offer free trial periods, you may want to look into their paid versions as these plans offer more advanced features that are likely to be more useful for your organization’s needs.


Another factor to take into account is the ability to hide your identity and remain anonymous while scraping the web for data. While most web scraping automation tools are compatible with proxy servers, it is important to make sure that the proxy server you select and the web scraping tool you use work well together. LimeProxies is compatible with most web scraping tools and can provide you not only with anonymity, but also with security, since it has a feature called Anytime IP Refresh. With this feature, your IP address can be changed at any time, either on demand or on a pre-set schedule. By using LimeProxies, you will lessen or even remove the chance of being blocked by the websites you crawl.
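
The general idea of sending requests through changing proxy addresses can be sketched with Python's standard library. This is a generic illustration, not the LimeProxies API or its Anytime IP Refresh feature; the proxy addresses are placeholders from a range reserved for documentation, and the class name is hypothetical.

```python
import itertools
import urllib.request

# Placeholder proxy addresses (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
]


class RotatingProxyPool:
    """Hand out proxies in round-robin order so that consecutive requests differ."""

    def __init__(self, proxy_urls):
        self._cycle = itertools.cycle(proxy_urls)

    def next_proxy(self):
        return next(self._cycle)

    def build_opener(self):
        """Return (proxy, opener); the opener routes HTTP and HTTPS via the proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)
```

With real proxy addresses, `proxy, opener = pool.build_opener()` followed by `opener.open(url, timeout=10)` would send one request through the next proxy in the list.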


About the author

Rachael Chapman

A Complete Gamer and a Tech Geek. Brings out all her thoughts and Love in Writing Techie Blogs.
