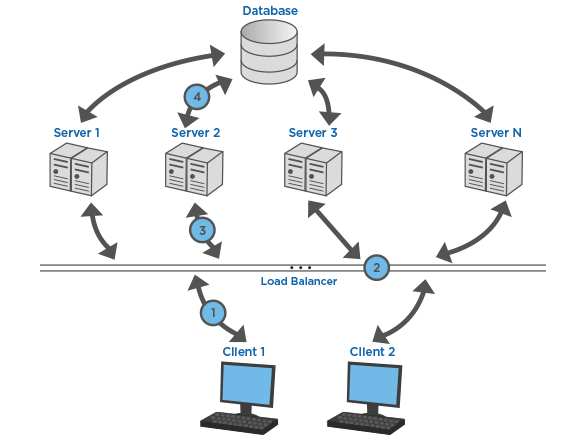

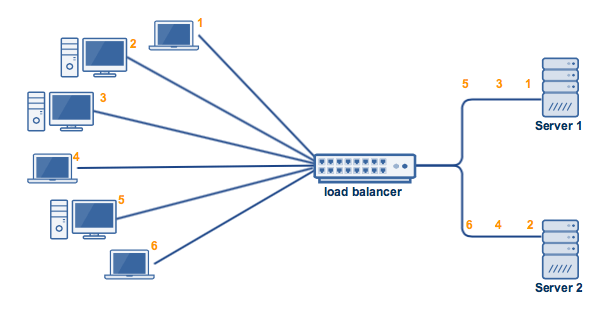

Balancing traffic on a website or network plays an important role in protecting servers from crashing. As traffic grows, so does the load on the servers, and overloading can cause them to crash. Traditional web applications ran on a single server that could not handle client requests efficiently once traffic passed a certain threshold, and an overloaded server would crash. Modern internet infrastructure solves this problem by running multiple servers behind load balancers, which keep network traffic at manageable levels. A load balancer receives all requests sent by clients and distributes them across the available servers so that performance stays high.

HOW TO BALANCE TRAFFIC

Load Balancing

Traffic on a network can be balanced by distributing client requests evenly among the servers in a cluster. Load balancing increases the availability of the applications and resources clients need, and it helps every request complete as quickly as possible. It also makes service delivery more efficient, because the failure of one server does not waste time: its requests are handled by the others.

Network Load Balancing

Network load balancing is also referred to as multi-homing or dual WAN routing. It balances traffic across two Wide Area Network (WAN) links without requiring more complicated routing protocols such as the Border Gateway Protocol (BGP).

It balances network sessions, such as email and web traffic, across several connections in order to spread the bandwidth used by each Local Area Network (LAN) user. This reduces the load on each link and increases the overall bandwidth available, so traffic is processed faster than when all of it flows through a single connection.

Network load balancing also provides network redundancy, which keeps the network accessible even during link outages.

If one host breaks down, network load balancing redistributes its traffic to the remaining hosts.

Session Balancing

Session balancing distributes multiple sessions across several WAN links. Browsers tend to open several sessions at once; some may carry images while others carry text. These sessions should be balanced to protect the servers from crashing, and File Transfer Protocol (FTP) traffic should be balanced as well. This spreads the load across all connections, which improves throughput and overall performance.
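Session-level balancing of this kind can be sketched in Python. This is a minimal illustration, not any router's implementation: the `SessionBalancer` class, its method names and the link names `wan1`/`wan2` are hypothetical, and it assumes link failures are reported to it by some external monitor. Each new session is pinned to the healthy link with the fewest open sessions, and when a link fails its sessions are reassigned to the remaining links.

```python
class SessionBalancer:
    """Hypothetical sketch: spread sessions over WAN links, keeping each session on one link."""

    def __init__(self, links):
        self.links = list(links)   # e.g. ["wan1", "wan2"]; link names are placeholders
        self.up = set(self.links)  # links currently believed to be working
        self.sessions = {}         # session id -> link carrying that session

    def _active(self, link):
        """Number of open sessions currently assigned to a link."""
        return sum(1 for assigned in self.sessions.values() if assigned == link)

    def _least_loaded_link(self):
        healthy = [link for link in self.links if link in self.up]
        if not healthy:
            raise RuntimeError("no WAN link is up")
        # min() keeps the first link on a tie, so ties go to the earlier link in the list.
        return min(healthy, key=self._active)

    def open_session(self, session_id):
        """Pin a new session (page text, images, an FTP transfer...) to one link."""
        link = self._least_loaded_link()
        self.sessions[session_id] = link
        return link

    def close_session(self, session_id):
        self.sessions.pop(session_id, None)

    def link_down(self, link):
        """Mark a link as failed and move its sessions to the remaining links."""
        self.up.discard(link)
        for session_id in [s for s, l in self.sessions.items() if l == link]:
            del self.sessions[session_id]
            self.open_session(session_id)
```

For example, with links `wan1` and `wan2`, three sessions opened in turn land on `wan1`, `wan2` and `wan1`, and calling `link_down("wan1")` moves both `wan1` sessions to `wan2`. In a real network, connections that were using a failed link usually have to be re-established, so "moving" a session here only means its future traffic uses the other link.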

Server Load Balancing

Several servers combined together form a cluster of web servers. Traffic is balanced by distributing all incoming requests across the servers in this cluster.

Server load balancing lets servers manage traffic effectively, especially at high volume. It also helps prevent accessibility problems and reduces load times. Using multiple servers is one way to improve application response time and throughput, because traffic is spread across several servers instead of being concentrated on one. This can be achieved in the following ways:

Server Load Balancer

A load balancer distributes workloads across many backend servers, commonly referred to as a server pool or server farm.

Many high-traffic websites receive thousands of requests from different clients and must respond with the correct application data, text, video or images as quickly and reliably as possible. To manage this heavy traffic cost-effectively, most websites use multiple servers.

A server load balancer distributes the requests it receives from clients across the servers that can handle them most effectively and quickly. By balancing requests across all servers, it ensures that none of them is overworked, which prevents servers from crashing. It keeps the servers performing well, and if one server breaks down, the load balancer redirects incoming requests to the remaining online servers. When a new server is added, the load balancer automatically starts sending requests to it so that traffic stays balanced across all servers.
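This behaviour (skipping failed servers and including newly added ones) can be sketched as a round-robin pool. It is a hypothetical illustration: `ServerPool`, `mark_down`, `mark_up` and `add_server` are names invented for the example, and it assumes a separate health checker calls `mark_down` and `mark_up`.

```python
class ServerPool:
    """Hypothetical sketch: round robin over the servers that are currently healthy."""

    def __init__(self, servers):
        self.servers = list(servers)  # rotation order
        self.healthy = set(self.servers)
        self._counter = 0

    def add_server(self, server):
        """A newly added server joins the rotation immediately."""
        if server not in self.servers:
            self.servers.append(server)
        self.healthy.add(server)

    def mark_down(self, server):
        self.healthy.discard(server)  # failed health check: stop routing to it

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)  # recovered: route to it again

    def route(self):
        """Return the server that should handle the next request."""
        online = [s for s in self.servers if s in self.healthy]
        if not online:
            raise RuntimeError("no healthy servers available")
        server = online[self._counter % len(online)]
        self._counter += 1
        return server
```

With `app2` marked down, requests alternate between `app1` and `app3`; once `app4` is added it joins the rotation without further configuration.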

The functions of a load balancer can be summarized as follows:

Use of Multilayer Switching (MLS)

Multilayer switches are hardware network devices that can distribute network traffic across several servers, which helps improve application performance. Multilayer switching operates at more than one OSI layer:

- Switching at OSI layer 2

- Switching at OSI layer 3

OSI Layer 2 Switching

OSI layer 2 handles the transfer of data between nodes in a Local Area Network (LAN) or a Wide Area Network (WAN). A multilayer switch performs this layer 2 switching and, because it also operates at layer 3, can forward data between different networks.

TYPES OF LOAD BALANCERS

There are two main types of load balancers: hardware and software.

Both hardware and software load balancers distribute a network's workload and keep network resources available to clients. Note, however, that the two types use different scheduling algorithms and routing mechanisms.

Hardware Load Balancing

Hardware load balancers operate at layers 4 and 7 of the OSI model, distributing data so that each server receives a balanced share of requests.

They rely mainly on specialized, vendor-supplied processors built for this kind of traffic balancing. To handle heavier traffic on a website, users have to buy larger appliances that can accommodate more of these processors.

Disadvantages of Hardware Load Balancers

1. They are costly

The main disadvantage of hardware load balancers is that they are an expensive way of dealing with heavy website traffic.

2. They lack flexibility

They are also less flexible than software load balancers.

Software Load Balancing

Software load balancers distribute client requests across multiple servers, reducing the workload on each server and, consequently, the likelihood of servers crashing.

There are two main classes of software load balancers:

Installable software-based load balancer

This type is software that you install on your own hardware to distribute requests. Load balancers in this category include products from Radware (Alteon), Citrix (NetScaler) and Cisco; they are commonly deployed in front of applications such as Microsoft SharePoint, Microsoft Exchange, SAP and Windows Terminal Server.

An installable software-based load balancer must be installed, configured and managed on the user's own hardware.

Load Balancer as a Service (LBaaS)

With this kind of load balancer, a cloud service provider handles installation and management and also provides the fault tolerance and elasticity the load balancer needs.

Examples of load balancers as a service include Stratoscale Symphony and AWS Elastic Load Balancing (ELB).

Advantages of Software Load Balancers

- They are simple and easy to set up.

The main advantage of software load balancers is that they are easy to configure, so users can set up their protocols quickly.

- They offer a cheap solution for traffic balancing.

Software load balancers are also a more cost-effective way of handling high website traffic. They cost much less than hardware alternatives, and many cloud providers offer load balancers at affordable prices. A wide range of cloud providers also offer application testing, which is especially useful for resource-intensive applications.

- They have greater flexibility.

Software load balancers are more flexible: unlike hardware load balancers, they are easy to adjust.

- They have the capacity to handle large volumes of network traffic.

Software load balancers can deal with large numbers of client requests at any given time. They can handle thousands of incoming requests from different clients and distribute them to the available, functioning servers.

- They are scalable.

Their capacity to handle network traffic can be increased as demand grows, whereas scalability is a challenge for hardware load balancers. Software load balancers run on x86 servers, which are scalable, while hardware load balancers run on dedicated hardware whose capacity cannot be scaled in the same way.

Users can run them in cloud environments such as AWS EC2 or install the software on their preferred hardware.

Software load balancers can further be categorized based on routing algorithms.

Load Balancing Algorithm

A load balancing algorithm is the logic that controls how requests received from clients are distributed among the available, functioning servers.

There are several load balancing algorithms, and which one to use depends largely on how complex or simple the load balancing task is. The most commonly used methods include the following:

- Round Robin

Round Robin, sometimes called the Next-in-Loop algorithm, is the most common and basic load balancing method. Requests from clients are distributed across all available servers in sequence: the first request goes to the first server, the second to the second, and so on, wrapping back to the first server after the last. It is popular mainly because it requires no specialized hardware or software, which makes it a cost-effective way of handling heavy traffic.
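A minimal sketch of the Round Robin rotation, assuming requests and servers are plain labels (the function name is hypothetical). The rotation ignores how busy each server is, which is the trade-off for its simplicity.

```python
from itertools import cycle


def assign_round_robin(requests, servers):
    """Pair each request with the next server in a fixed, repeating order."""
    rotation = cycle(servers)  # A, B, C, A, B, C, ...
    return [(request, next(rotation)) for request in requests]
```

For five requests and servers `A`, `B`, `C`, the assignment is `A`, `B`, `C`, `A`, `B`.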

- Weighted Round Robin

Weighted Round Robin is similar to Round Robin; the only difference is that each server is given a weight, and servers with higher weights receive a larger share of the requests. The algorithm was originally developed for Asynchronous Transfer Mode (ATM) networks, which carry data in fixed-size packets (cells).
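One common way to implement Weighted Round Robin is the so-called smooth variant sketched below, which interleaves picks instead of sending a heavy server several requests in a row. The function name and the example weights are assumptions for illustration; with weights 5, 1 and 1, server `A` receives five of every seven requests.

```python
def smooth_weighted_round_robin(weights, n):
    """Return the first n picks for a {server: weight} mapping."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server earns credit equal to its weight each round.
        for server, weight in weights.items():
            current[server] += weight
        # The server with the most credit is picked (first one listed wins a tie)...
        chosen = max(current, key=current.get)
        # ...and pays back the total, so heavier servers are picked more often.
        current[chosen] -= total
        picks.append(chosen)
    return picks
```

For `{"A": 5, "B": 1, "C": 1}` the first seven picks are `A, A, B, A, C, A, A`.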

- Source IP Hash

In this algorithm, the server that receives a request is determined by the IP address the request comes from. The load balancer combines the client's and the server's IP addresses into a unique hash key and uses it to allocate the client's request to a server. As long as all servers are functional, requests from a particular IP address are always directed to the same web server. If a session is broken, the same key is generated again, so the client's requests are directed back to the server it was using before the session was broken.
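A sketch of source IP hashing follows. It assumes that the "server" address in the key is the virtual server IP the client connected to (the text does not say which server address is used) and that the server list is fixed. The function name and addresses are hypothetical; the addresses come from the reserved documentation ranges.

```python
import hashlib


def pick_by_source_ip(client_ip, virtual_ip, servers):
    """Map a client to a server by hashing the client and virtual-server IP addresses."""
    key = f"{client_ip}|{virtual_ip}".encode()
    # hashlib gives the same digest in every process; Python's built-in hash() of a str
    # is randomized per process, so it would not keep a client on the same server.
    digest = hashlib.sha256(key).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Because the index is taken modulo the number of servers, adding or removing a server remaps most clients, which is why the guarantee only holds while the set of servers stays the same.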

- URL Hash

URL Hash is very similar to Source IP Hash; the only difference is that the requested URL is hashed instead of the IP address. This algorithm is particularly useful for balancing traffic in front of proxy caches, because all requests for a particular item are sent to a single backend cache, which prevents the same item from being cached more than once.
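URL hashing can be sketched the same way, hashing the requested item instead of the client address. This assumes that the path plus query string identifies a cached item, so the `http` and `https` forms of a URL map to the same cache; the function and cache names are hypothetical.

```python
import hashlib
from urllib.parse import urlsplit


def pick_cache_for_url(url, caches):
    """Send every request for the same item to the same backend cache."""
    parts = urlsplit(url)
    # Assumption: the path plus query string identifies the cached item.
    item = parts.path + ("?" + parts.query if parts.query else "")
    digest = hashlib.sha256(item.encode()).digest()
    return caches[int.from_bytes(digest[:8], "big") % len(caches)]
```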

- Least Connections

This algorithm sends each new request to the server with the fewest active client connections. Because it counts connections without accounting for differences in server capacity, it is best suited to situations where the servers have uniform resources and capacity; there, it helps reduce latency by keeping requests from piling up on a single server.
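A minimal Least Connections sketch, assuming the balancer is told when each connection opens (`acquire`) and closes (`release`); the class and method names are hypothetical. Ties go to the server listed first.

```python
class LeastConnections:
    """Hypothetical sketch: track open connections and pick the least busy server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        """Choose a server for a new connection and count it as open."""
        server = min(self.active, key=self.active.get)  # first server wins a tie
        self.active[server] += 1
        return server

    def release(self, server):
        """Record that a connection to `server` has closed."""
        self.active[server] -= 1
```

With servers `A`, `B` and `C`, the first three connections go to `A`, `B`, `C`; if `B`'s connection then closes, the next one goes to `B`.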

- Weighted Least Connections

Weighted Least Connections works on the same principle as Least Connections. The only difference is that each server's capacity, expressed as a weight, is considered together with its current number of connections when selecting a server.
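For Weighted Least Connections, one common formulation (assumed here) divides each server's active connections by its weight and picks the lowest ratio. The function name is hypothetical, and the weights are illustrative capacities that must be positive.

```python
def pick_weighted_least_connections(active, weights):
    """active: {server: open connections}; weights: {server: relative capacity > 0}."""
    # Lowest connections per unit of capacity wins.
    return min(active, key=lambda server: active[server] / weights[server])
```

If `big` has 5 connections and weight 3 while `small` has 2 connections and weight 1, `big`'s ratio (about 1.67) beats `small`'s 2.0, whereas plain Least Connections would choose `small`.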

- Fixed Weighted (Chained Failover)

In this technique, network traffic is shared among several servers collectively referred to as a cluster. The order in which traffic is distributed among the servers is predetermined and arranged in a chain. New requests are first sent to the server that appears first in the chain. When that server reaches maximum capacity and cannot handle more requests, the remaining requests are sent to the second server in the chain; when the second server is full, requests go to the next one, and so on.
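The chained failover order can be sketched as a walk down a fixed list. This assumes the balancer knows each server's current load and maximum capacity; both inputs, and the function name, are hypothetical.

```python
def pick_chained(chain, active, capacity):
    """Return the first server in the fixed chain that still has spare capacity."""
    for server in chain:
        if active[server] < capacity[server]:
            return server
    return None  # every server in the chain is at maximum capacity
```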

- Least Pending Requests

The Least Pending Requests algorithm is widely used in the industry today. It uses real-time monitoring to decide which server receives each incoming client request: the server with the fewest pending requests receives the next one. To use this algorithm, the virtual server must be assigned a layer 7 profile as well as a TCP profile. The algorithm is favored mainly because the number of pending requests reflects how much load each connection is actually placing on a server at any given time. Response time is also used to decide which server receives the next task, which makes the system more efficient: heavily loaded servers and servers with slow response times are not assigned new tasks.
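A sketch of Least Pending Requests, assuming the balancer counts requests it has forwarded but not yet seen answered. Using average response time as a tie-breaker is an assumption made to reflect the point about response time; the `Backend` type and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    pending: int = 0              # requests forwarded but not yet answered
    avg_response_ms: float = 0.0  # recent average response time


def pick_least_pending(backends):
    # Fewest pending requests wins; a lower average response time breaks ties (assumption).
    return min(backends, key=lambda b: (b.pending, b.avg_response_ms))
```

Given backends `a` (3 pending), `b` (1 pending, 80 ms) and `c` (1 pending, 40 ms), the next request goes to `c`.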

- Weighted Response Time

In this method, health checks on each server show which server is responding fastest to client requests at any given time, and that server is normally allocated the next client request.
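Weighted Response Time can be sketched as a table of health-check timings. Smoothing the timings with an exponentially weighted moving average (`alpha=0.3`) is an assumption, added so that a single slow check does not swing the choice; the class name is hypothetical.

```python
class ResponseTimeTracker:
    """Hypothetical sketch: pick the server whose health checks respond fastest."""

    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha  # weight given to the newest measurement
        self.avg_ms = {server: None for server in servers}

    def record_health_check(self, server, elapsed_ms):
        previous = self.avg_ms[server]
        if previous is None:
            self.avg_ms[server] = elapsed_ms
        else:
            # Exponentially weighted moving average: recent checks count more.
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * previous

    def fastest(self):
        measured = {s: t for s, t in self.avg_ms.items() if t is not None}
        if not measured:
            raise RuntimeError("no health-check results yet")
        return min(measured, key=measured.get)
```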

SOFTWARE DEFINED NETWORKING (SDN) ADAPTIVE

SDN adaptive load balancing combines information from layers 2, 3, 4 and 7 to decide which server should receive a given task or client request. That information includes the health of network resources, the level of network congestion, the state of network applications and the state of the web servers.
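The SDN adaptive decision can be sketched as a score that combines network-level and application-level data. The fields, the 0 to 1 scales and the equal weighting are all hypothetical; a real SDN controller gathers these values from the network and applies its own policy.

```python
from dataclasses import dataclass


@dataclass
class ServerState:
    name: str
    healthy: bool           # e.g. from health checks on the server
    path_congestion: float  # 0.0 (idle) to 1.0 (saturated), from layer 2/3 network data
    app_load: float         # 0.0 (idle) to 1.0 (saturated), from layer 4/7 application data


def pick_sdn_adaptive(states, network_weight=0.5, app_weight=0.5):
    """Return the healthy server with the lowest combined congestion/load score."""
    candidates = [s for s in states if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy server")
    return min(candidates,
               key=lambda s: network_weight * s.path_congestion + app_weight * s.app_load)
```

A server with an idle application but a congested network path can lose to one that is moderately loaded on both, and an unhealthy server is never chosen.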

SERVER GROUP CONFIGURATION

In dynamic application environments, servers need to be added or removed regularly. Cloud environments make this possible; the most common is AWS EC2, which lets users pay only for the capacity they use. The capacity to process client requests can therefore grow as traffic or demand increases. The advantage of environments such as AWS EC2 is that load balancers can easily remove non-functioning servers or add new ones without disrupting existing connections.
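Adding and removing servers without disrupting existing connections is usually done by draining: a removed server stops receiving new requests but keeps serving its open connections until they close. The following is a hypothetical sketch (the class name and instance IDs are invented) that uses least connections to place new requests.

```python
class ServerGroup:
    """Hypothetical sketch: a server group whose members can be added or drained."""

    def __init__(self):
        self.active = {}    # server -> open connections; eligible for new requests
        self.draining = {}  # server -> open connections; no new requests

    def add(self, server):
        self.active.setdefault(server, 0)

    def remove(self, server):
        """Stop sending new requests to a server but let its open connections finish."""
        if server in self.active:
            self.draining[server] = self.active.pop(server)
            self._forget_if_idle(server)

    def open_connection(self):
        if not self.active:
            raise RuntimeError("no servers in the group")
        server = min(self.active, key=self.active.get)  # least connections
        self.active[server] += 1
        return server

    def close_connection(self, server):
        if server in self.active:
            self.active[server] -= 1
        elif server in self.draining:
            self.draining[server] -= 1
            self._forget_if_idle(server)

    def _forget_if_idle(self, server):
        # A drained server with no open connections can be shut down safely.
        if self.draining.get(server) == 0:
            del self.draining[server]
```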

TECHNIQUES USED IN LOAD BALANCING

Different techniques are used today to distribute traffic among the servers in a network. They protect servers from crashing as a result of excessively heavy traffic and also improve the throughput, efficiency and overall performance of websites.

The techniques of load balancing include the following:

- Agent-based adaptive load balancing

- Network load balancing

- Routed load balancing

- Bridged load balancing

- DNS load balancing

1. Agent Based Adaptive Load Balancing

In this technique, traffic is distributed across multiple servers grouped into a cluster. Each server in the cluster runs an agent that reports its current load to the load balancer, and the load balancer uses these reports to decide which server should receive each client request. Agent-based adaptive load balancing is used together with other load balancing techniques to handle traffic and prevent crashes.
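Agent-based balancing can be sketched as a table of load reports. The load scale (0 to 1), the 10-second staleness cut-off and the class name are assumptions; ignoring stale reports keeps the balancer from trusting an agent that has stopped reporting.

```python
import time


class AgentLoadTable:
    """Hypothetical sketch: the balancer's view of the loads its agents report."""

    def __init__(self, max_age_s=10.0):
        self.max_age_s = max_age_s
        self.reports = {}  # server -> (reported load from 0.0 to 1.0, time received)

    def report(self, server, load, now=None):
        """Called whenever a server's agent sends its current load."""
        self.reports[server] = (load, time.monotonic() if now is None else now)

    def pick(self, now=None):
        now = time.monotonic() if now is None else now
        fresh = {server: load for server, (load, received) in self.reports.items()
                 if now - received <= self.max_age_s}
        if not fresh:
            raise RuntimeError("no recent agent reports")
        return min(fresh, key=fresh.get)  # least-loaded server that reported recently
```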

2. Network Load Balancing

This technique uses a cluster of two to thirty-two servers, each with its own unique IP address and computer identity.

3. Routed Load Balancing

Routed load balancing balances the load at layer 3. The virtual server's IP address exists on one network, while the real servers' IP addresses are on one or more other networks.

4. Bridged Load Balancing

In bridged load balancing, the virtual server's IP address is on the same network as the real servers.

5. Domain Name System (DNS) Load Balancing

This technique configures a domain in DNS so that client requests for that domain are distributed across a group of servers, for example by returning the servers' addresses in a different order to different clients.
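One common form of DNS load balancing is round-robin DNS: the DNS server returns the same set of address records but rotates their order on each query, so clients that use the first address are spread across the servers. The class below is a hypothetical sketch of that rotation, using addresses from the reserved documentation range; in practice, resolver caching makes the spread less even.

```python
from collections import deque


class RoundRobinDNS:
    """Hypothetical sketch: rotate the order of A records on every query."""

    def __init__(self, addresses):
        self._records = deque(addresses)

    def resolve(self):
        answer = list(self._records)
        self._records.rotate(-1)  # the next query sees a different address first
        return answer
```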

PERFORMANCE TRAFFIC ROUTING

Performance traffic routing determines where a client request comes from and directs it to the nearest endpoint, that is, the one geographically closest to the client. Sending requests to nearby endpoints reduces the distance traffic has to travel.
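Choosing the geographically nearest endpoint can be sketched using great-circle (haversine) distance. It assumes the client's approximate coordinates are already known, for example from IP geolocation; the endpoint names and coordinates are illustrative. Some services measure network latency instead of distance, since the two do not always agree.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (latitude, longitude) points in kilometres."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearest_endpoint(client, endpoints):
    """client: (lat, lon); endpoints: {name: (lat, lon)}. Returns the closest name."""
    lat, lon = client
    return min(endpoints, key=lambda name: haversine_km(lat, lon, *endpoints[name]))
```

With endpoints near Dublin, Washington and Mumbai, a client in Paris is routed to the Dublin endpoint and a client in New York to the Washington one.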

SESSION PERSISTENCE

In information technology, session persistence (also called sticky sessions) refers to sending all requests from a given client to the same application server or backend web server for a certain period of time. A session here is the time needed for a task to be executed. Organizations with a large client base and high traffic must process large volumes of requests as quickly as possible, so they group their servers into server farms and use load balancers to balance traffic across the farm. Session persistence ensures that, within such a farm, a client's requests keep reaching the server that is handling its session.
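Session persistence can be sketched as a table that remembers which server each client used. The client identifier (for example, a cookie value), the 30-minute persistence window and the round-robin choice for new clients are assumptions; the class name is hypothetical.

```python
import time


class StickySessions:
    """Hypothetical sketch: keep each client on the same server within a time window."""

    def __init__(self, servers, ttl_s=1800.0):
        self.servers = list(servers)
        self.ttl_s = ttl_s  # persistence window in seconds
        self.table = {}     # client id -> (server, time last seen)
        self._next = 0

    def route(self, client_id, now=None):
        now = time.monotonic() if now is None else now
        entry = self.table.get(client_id)
        if entry is not None and now - entry[1] <= self.ttl_s:
            server = entry[0]  # still inside the window: same server as before
        else:
            # New or expired client: choose the next server in round-robin order.
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
        self.table[client_id] = (server, now)
        return server
```

A returning client inside the window keeps its server; once the window lapses, it is treated as a new client and may be placed elsewhere.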

CONCLUSION

Websites can improve their efficiency, throughput and overall performance by installing load balancers, which protect servers from crashing due to overloading. When network traffic is well distributed among host servers, traffic flows faster and throughput increases. Companies and individual website owners should therefore install load balancers that are easy to use.

About the author

Rachael Chapman

A complete gamer and a tech geek who brings all her thoughts and love into writing tech blogs.