Web scraping is the extraction of information from a website, with or without the consent of the website owner. Although scraping can be done manually, it is usually automated because automation is far more efficient. Web scraping is often associated with malicious intent, but whatever the purpose, the same set of techniques is employed.

Among new and growing businesses, web scraping has become a familiar term, largely because of the idea that harvesting large amounts of data is necessary to stay in the market. Not all companies have the resources to extract data through web scraping, so they outsource the work to a reputable agency. They do this because much of the data on the internet is unstructured and cannot be used in the format in which it exists. If you are a developer interested in web scraping, we have compiled the technologies that will help you master it.

As a company, it is often better to outsource web scraping, as this leaves you more time for more productive tasks. But there is hardly anything as satisfying as getting your data yourself, and if that is your case, here are 5 technologies you need to get familiar with in order to scrape the web.

MANUAL SCRAPING

1. COPY-PASTING

In manual scraping, you copy and paste web content by hand. This is time-consuming and repetitive, which is why more efficient means of scraping exist. It is nonetheless very effective, because a website’s defences are aimed at automated scraping rather than manual techniques. Even with this benefit, manual scraping is rarely used, since automated scraping is quicker and cheaper.

AUTOMATED SCRAPING

1. HTML PARSING

HTML parsing is often done with JavaScript, although most programming languages have an HTML parser, and it targets linear or nested HTML pages. It is a fast and robust method used for text extraction, screen scraping, and resource extraction, among others.
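As a minimal sketch of HTML parsing, the example below uses Python's standard-library `html.parser` module rather than JavaScript. The `LinkExtractor` class, the `extract_links` helper, and the sample markup are illustrative assumptions, not taken from any particular site or tool.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (href, link text) pairs from <a> tags, including text in nested tags."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> tag currently open, if any
        self._text = []      # text fragments seen inside that <a> tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text while we are inside a link that has an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return every (href, text) pair found."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical nested markup used only for demonstration.
SAMPLE_HTML = """
<div><ul>
  <li><a href="/pricing">Pricing <b>plans</b></a></li>
  <li><a href="/docs">Docs</a></li>
</ul></div>
"""

print(extract_links(SAMPLE_HTML))
```

The same event-driven approach works for other tags; only the tag name and the attributes you collect change.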

2. DOM PARSING

DOM is short for Document Object Model, which defines the style, structure, and content of HTML and XML documents. Scrapers use DOM parsers to get an in-depth view of a web page’s structure. They can also use a DOM parser to find the nodes containing information and then use a tool like XPath to extract it. A full browser such as Internet Explorer or Firefox can be embedded to extract the entire web page or just parts of it.

3. VERTICAL AGGREGATION

Vertical aggregation platforms are created by companies with access to large-scale computing power to target specific verticals. Some companies run these platforms in the cloud. The platforms create and monitor bots for specific verticals without any human intervention. Since the bots are built from the knowledge base for a specific vertical, their quality is measured by the quality of the data they extract.

4. XPATH

XPath, the XML Path Language, is a query language for XML documents. Because XML documents have a tree-like structure, XPath can navigate them by selecting nodes based on different parameters. XPath can be used together with DOM parsing to scrape an entire web page.
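To make the combination of tree parsing and XPath concrete, here is a small sketch using Python's standard-library `xml.etree.ElementTree`, which supports only a limited subset of XPath. The catalog document and the `names_in_category` helper are hypothetical and exist only for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML document used only for demonstration.
SAMPLE_XML = """
<catalog>
  <product category="laptop"><name>Alpha</name><price>999</price></product>
  <product category="phone"><name>Beta</name><price>499</price></product>
  <product category="laptop"><name>Gamma</name><price>1299</price></product>
</catalog>
"""


def names_in_category(xml_text, category):
    """Return the <name> text of every <product> with the given category attribute."""
    root = ET.fromstring(xml_text)  # parse the document into a tree of nodes
    # XPath: any <product> whose category attribute matches, then its <name> child.
    path = f".//product[@category='{category}']/name"
    return [node.text for node in root.findall(path)]


print(names_in_category(SAMPLE_XML, "laptop"))
```

Real-world HTML is often not well-formed XML, so a page usually has to be parsed by an HTML-aware parser before XPath queries like this can be run against it.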

5. GOOGLE SHEETS

Google Sheets is a scraping tool that is quite popular among web scrapers. From within Sheets, a scraper can use the IMPORTXML(url, xpath_query) function to pull as much data as needed from websites. This method is only useful when specific data or patterns are required from a website. You can also use this function to check whether your own website is secure from scraping.

6. TEXT PATTERN MATCHING

This technique matches text against regular expressions, using either the UNIX grep command or the regular-expression facilities of popular programming languages like Perl or Python.
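A short sketch of text pattern matching with Python's built-in `re` module follows. The two patterns, the helper names, and the sample text are assumptions chosen for illustration; production patterns usually need to be tuned to the target page.

```python
import re

# Dollar amounts such as $9.99 or $49 (the cents part is optional).
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{2})?)")
# A deliberately simple email pattern; it does not cover every valid address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def find_prices(text):
    """Return every dollar amount in the text as a float."""
    return [float(amount) for amount in PRICE_PATTERN.findall(text)]


def find_emails(text):
    """Return every email-like string in the text."""
    return EMAIL_PATTERN.findall(text)


# Hypothetical page text used only for demonstration.
SAMPLE_TEXT = "Basic plan $9.99, Pro plan $49. Contact sales@example.com for details."

print(find_prices(SAMPLE_TEXT))
print(find_emails(SAMPLE_TEXT))
```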

Web scraping involves tools and services that are easily obtained online. To be proficient in web scraping, you need to know all the techniques, or you can hand the task to a freelancer. Automated web scraping tools and services include limeproxies, cURL, Wget, HTTrack, Import.io, Node.js, and many others. Scrapers also commonly use headless browser tools such as PhantomJS, SlimerJS, and CasperJS.

Other web scraping technologies to master are:

1. SELENIUM

Selenium is a web browser automation tool that lets you script a browser to perform preset actions, much as a bot would. Learning to use Selenium will go a long way in helping you understand how websites work. It can mimic a normal human visit to a page using a regular web browser, which helps you get accurate data. It is also often used in web scraping to handle pages that load their content through AJAX calls.

Being a powerful automation tool, it can give you more than just the capacity to perform web scraping. You can also test websites and automate any time-consuming action on the web.

2. BOILERPIPE

When you have to extract text together with the associated titles, Boilerpipe will come in handy. Boilerpipe is a Java library made for extracting data from the web, whether structured or unstructured. It strips out the unwanted HTML tags and other noise found in web pages, leaving you with clean text. An endearing feature of this technology is that it scrapes rapidly with little input from the user. The speed does not come at the cost of accuracy, and together these qualities make it one of the easiest scraping tools available.

3. NUTCH

When the topic of a gold standard web scraping technology is mentioned, Nutch is one of the top options that will be presented. It is an open-source web crawler that extracts data from web pages at lightning speed. Nutch crawls, extracts and stores data once it has been programmed. Its powerful algorithm is what makes it stand out as one of the best web scraping tools available.

In order to scrape with Nutch, the web pages to crawl have to be specified in Nutch manually. Once this has been done, it will scan through the pages, fetch the required data, and store it on the server.

4. WATIR

Watir (pronounced "water") is an open-source family of Ruby libraries that is a good choice for web browser automation, as it is easy to use and very flexible. One of the reasons it suits web scraping so well is that it interacts with web browsers the same way humans do.

It can be used to click links, fill in forms, press buttons, and do anything else you can imagine a human doing on a web page. Ruby makes Watir enjoyable and easy to use because, like other programming languages, it lets you read data files, export XML, connect to databases, and write spreadsheets.

5. CELERITY

This is a JRuby wrapper around HtmlUnit (a headless Java browser with support for JavaScript). Its API is easy to use and lets you navigate easily through web applications. It runs at an impressive speed, as there is no time-consuming GUI rendering and there are no unnecessary downloads. Since it is scalable and non-intrusive, it can run silently in the background once it has been set up. Celerity is a good browser automation tool that you can use for effective web scraping.

Anti-Scraping Techniques and How They Can Be Avoided

1. IP

IP tracking is one of the easiest ways for a website to detect web scraping, as the behaviour behind an IP address shows whether it belongs to a robot or a human. An IP address is likely to be blocked when a website receives a lot of requests from it within a short period, as this behaviour is not natural. Anti-scraping mechanisms take the number and frequency of visits per unit of time into consideration, and here are some of the scenarios you may encounter while scraping:

Scenario 1: no human can make multiple visits to a website within seconds. So if your crawler sends requests too frequently, the website will tag your IP as a robot and block it.

Solution: reduce scraping speed. Setting a delay between two steps usually helps prevent your IP from being detected as a robot.

Scenario 2: visiting a website at exactly the same pace and pattern is not human. Some websites monitor the frequency of requests and analyze the patterns in which they are sent. If requests arrive in a fixed pattern, such as once per second, the anti-scraping mechanism is likely to be activated and block you.

Solution: when setting a delay between steps, choose random times. With a random scraping speed, your crawler will be more human-like.
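The two solutions above can be sketched as a crawl loop that sleeps for a random interval between requests. This is a minimal illustration: the function names, the 2 to 6 second range, and the injectable `fetch` and `sleep` parameters are assumptions, and suitable delays depend on the target site.

```python
import random
import time


def random_delay(min_seconds=2.0, max_seconds=6.0, rng=random):
    """Pick a delay uniformly at random so requests do not arrive at a fixed pace."""
    return rng.uniform(min_seconds, max_seconds)


def crawl_politely(urls, fetch, min_seconds=2.0, max_seconds=6.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random time between requests.

    fetch is whatever function downloads a page (hypothetical here);
    sleep is injectable so the loop can be exercised without real waiting.
    """
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Wait before every request except the first one.
            sleep(random_delay(min_seconds, max_seconds))
        results.append(fetch(url))
    return results
```

A call such as `crawl_politely(page_urls, fetch=download_page)` would then space the requests out, where `page_urls` and `download_page` stand in for your own URL list and download function.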

Scenario 3: some websites use high-level anti-scraping techniques with complex algorithms that track and analyze the requests from different IPs. If the requests from an IP are unusual, for example repetitive and predictable, that IP will be blocked.

Solution: change your IP periodically. Most proxy services, like limeproxies, provide IPs that you can rotate. Sending requests through rotated IP addresses makes your crawler behave less like a bot and more like a human. With this kind of unpredictable behaviour, the chances of your IP being blocked are reduced.
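One way to rotate IPs is to cycle through a pool of proxies and build a new opener for each request. The sketch below uses only Python's standard library; the `ProxyRotator` class is hypothetical, and the proxy addresses are placeholders from a documentation-only IP range, so substitute the endpoints supplied by your proxy provider.

```python
import itertools
import urllib.request

# Placeholder proxies (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hands out proxies from a pool in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        """Return the next proxy address in the rotation."""
        return next(self._cycle)

    def build_opener(self):
        """Return (proxy, opener), where opener routes traffic through that proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)
```

Calling `build_opener()` before each request and then `opener.open(url)` sends consecutive requests through different addresses. Round-robin is the simplest policy; picking a proxy at random is an equally valid choice.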

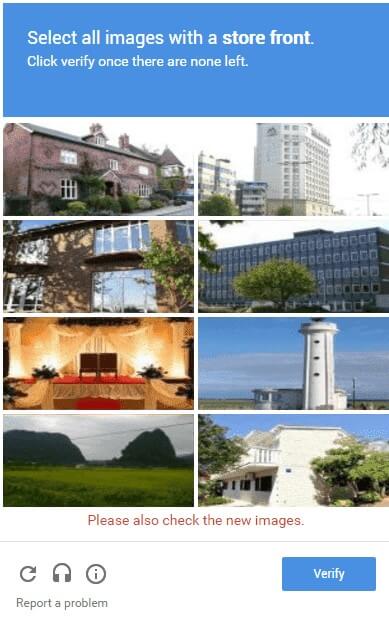

2. CAPTCHA

When browsing a website, you must have come across these images:

Need a click?

Need to select specific pictures?

Need to type in/select the right string?

These challenges are called CAPTCHAs (Completely Automated Public Turing test to tell Computers and Humans Apart). A CAPTCHA is an automated public test used to determine whether the user is a human or a robot. It presents challenges such as degraded images, equations, and fill-in-the-blanks, which are meant to be solvable only by a human.

CAPTCHA tests have been constantly evolving, and many websites use them as an anti-scraping technique. It was once difficult to pass a CAPTCHA directly, but with some open-source tools CAPTCHA problems can now be solved during scraping. This does, of course, require more advanced programming skills, and some people go as far as building feature libraries and creating image recognition techniques with machine learning or deep learning to pass CAPTCHA checks.

It is, however, easier to avoid triggering the captcha test than to solve it. Adjusting the request delay time and using rotating IPs are effective ways of reducing the occurrence of captcha tests.

3. LOG IN

Social media platforms and many other websites will only allow you access to the information after you log in and so in order to crawl such sites, the crawlers would need to learn how to log in.

After logging in, the crawler has to save the cookies. A cookie is a small piece of data that the website stores for a user so that it remembers you and does not ask you to log in again. Some websites with strict anti-scraping mechanisms will allow you access to only partial information even after you log in, such as 1000 lines of data per day.

Your Scraping Bot Needs To Know How to Log In

1. Simulate keyboard and mouse operations. The crawler bot should be able to mimic the login process, such as clicking on the text box and login button with the mouse, or typing in the account login information with the keyboard.

2. Save cookies after the first login. Some websites use cookies to remember users. With saved cookies, you do not need to log in again for a while. Thanks to this, your crawler does not have to go through the login process every time and can scrape as much data as you need.
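Saving and reusing cookies can be sketched with Python's standard-library `http.cookiejar`. The helper names and the cookie file path are assumptions, and the login request itself is site-specific, so it is not shown.

```python
import http.cookiejar
import os
import urllib.request


def open_session(cookie_file):
    """Return (opener, jar); cookies saved by an earlier run are loaded if present."""
    jar = http.cookiejar.MozillaCookieJar(cookie_file)
    if os.path.exists(cookie_file):
        # ignore_discard=True also restores session cookies, which login flows often use.
        jar.load(ignore_discard=True)
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def save_session(jar):
    """Write the cookies collected so far to the jar's file for the next run."""
    jar.save(ignore_discard=True)
```

A typical flow is to call `open_session("cookies.txt")`, perform the login through the returned opener if the jar is empty, and then call `save_session(jar)` so that later runs can skip the login step.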

4. UA

UA stands for user agent, a request header that tells the website how the user is visiting. It contains information such as the operating system and its version, CPU type, browser, browser version, browser language, browser plug-ins, etc.

An example of a UA is: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11

If your crawler sends no UA header as you scrape, it will be identified as a script. Requests from scripts do get blocked, so to avoid this the crawler has to present a UA header and pretend to be a browser in order to gain access.

Sometimes websites serve different information from the same page to different browsers or browser versions, even if you visit the same URL. This is because you are shown only the information that is compatible with your browser, and the rest is withheld. So to make sure you get what you need, multiple browsers and versions may be necessary.

Change the UA information until you find the one that shows you all the information you need. Some sensitive websites may block your access if you keep using the same UA for a long time, so you will need to change the UA information from time to time.
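Setting and rotating the UA header can be sketched with the standard-library `urllib.request`. The first string in the pool is the example UA quoted earlier; the other two and the `build_request` helper are illustrative assumptions, and a real pool should contain current browser strings.

```python
import random
import urllib.request

# Pool of user-agent strings to choose from (illustrative values).
USER_AGENTS = [
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 "
    "(KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/115.0",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
]


def build_request(url, user_agents=USER_AGENTS, rng=random):
    """Build a request whose User-Agent header is picked at random from the pool."""
    user_agent = rng.choice(user_agents)
    return urllib.request.Request(url, headers={"User-Agent": user_agent})
```

Passing the result to `urllib.request.urlopen(request)` sends the request with the chosen header, so consecutive requests do not all carry the same UA.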

5. AJAX

More websites are now being developed using AJAX instead of traditional web development techniques. AJAX stands for Asynchronous JavaScript and XML and it is a technique that updates the website asynchronously. This means that the whole website doesn’t need to reload when small changes take place inside the page.

For websites without AJAX, the page is refreshed even if you make only a small change. When scraping such websites, you can work out how the URL pattern changes with each reload. Generating the URLs in batches and extracting the information directly is easier than teaching your crawler to navigate through websites like a human.
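Once the URL pattern is known, generating the URLs in a batch is straightforward. In the sketch below, the base URL, the `page` query parameter, and the `build_page_urls` helper are hypothetical; the real pattern has to be read off the target site's own URLs.

```python
from urllib.parse import urlencode


def build_page_urls(base_url, first_page, last_page, page_param="page", extra_params=None):
    """Return one URL per page number, from first_page to last_page inclusive."""
    urls = []
    for page in range(first_page, last_page + 1):
        params = dict(extra_params or {})  # copy so the caller's dict is not modified
        params[page_param] = page
        urls.append(f"{base_url}?{urlencode(params)}")
    return urls


for url in build_page_urls("https://example.com/products", 1, 3):
    print(url)
```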

For a website with AJAX, only the part of the page you interact with changes rather than the whole page, so your crawler has to deal with it differently. A browser with built-in JavaScript support will render an AJAX website automatically, letting you extract the data you need.

About the author

Rachael Chapman

A Complete Gamer and a Tech Geek. Brings out all her thoughts and Love in Writing Techie Blogs.
