Before I babble on about the significance of web scraping in content curation in particular and content strategy in general, let me first define content curation. Content curation is when you pick out the best and most applicable content on the web and present it to your readers in an organized manner, based on a specific topic. It is a great addition to any content marketing strategy, especially on days when creating your own content from scratch seems daunting or when you are running out of ideas.

Content strategy involves planning out your content week by week, and there are times when you stare at a blank screen for minutes and nothing comes to mind. Content curation helps stretch out your ideas. For example, if you publish content three times a week, you can have two original write-ups and one curated piece each week. Your content ideas will not be used up as quickly.

Curata, a provider of content curation software, recommends the following mix in your content marketing strategy:

- Original content - 65%

- Curated content - 25%

- Syndicated content - 10%

Original content is what you or a hired writer creates from scratch, while syndicated content is another website’s content republished on your blog with permission and a reference to the original publisher.
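To see what that mix means for an actual calendar, here is a minimal sketch that splits a number of posts according to the percentages above. The function name `plan_posts` and the choice to give any rounding leftovers to original content are my own assumptions, not part of Curata's recommendation.

```python
# Percentages from the recommended mix quoted above.
MIX = {"original": 65, "curated": 25, "syndicated": 10}

def plan_posts(total_posts):
    """Split total_posts across the mix using whole posts only."""
    # Integer arithmetic rounds each share down to a whole number of posts.
    plan = {kind: total_posts * pct // 100 for kind, pct in MIX.items()}
    # Assumption: any posts lost to rounding go to original content.
    plan["original"] += total_posts - sum(plan.values())
    return plan

print(plan_posts(12))  # {'original': 8, 'curated': 3, 'syndicated': 1}
```

With twelve posts a month, that works out to roughly two original pieces for every curated or syndicated one.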

Your content ideas can, therefore, be spread out, so you will not run out of them as easily. As a result, you can keep your commitment to publishing content regularly. The demand for content can be overwhelming at times, but content curation can help ease the problem.

CONTENT CURATION VS CONTENT AGGREGATION

Content curation is often confused with content syndication and even content aggregation. I’ve already described content syndication above, while content aggregation is a different story. Content aggregation is simply the act of grouping content together based on topic and presenting it to readers as is, with no additional input or commentary.

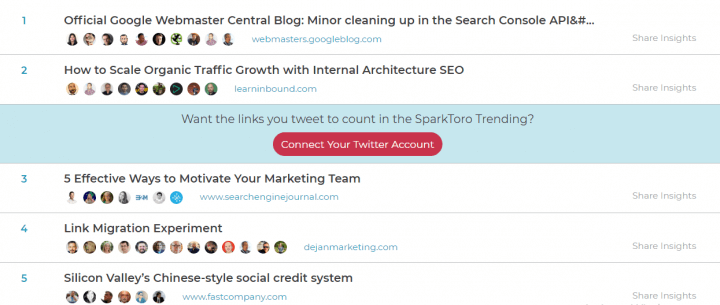

An example of content aggregation is Sparktoro’s Trending page, which they dubbed “The Front Page of the Web Marketing World.” The page presents the latest news, hot topics, and trending tweets in the marketing industry in one list.

This picture shows how Sparktoro listed the top news and blogs in the marketing world using content aggregation.

When you think about it, aggregation can be a part of content curation. If you want to curate content based on the aggregated content, you can simply browse through the list and select the pieces you want to feature.
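The difference between the two can be sketched in a few lines of code. This is only an illustration: the sample items, the `example.com` URLs, and the function names `aggregate` and `curate` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical items, standing in for whatever an aggregator returns.
ITEMS = [
    {"topic": "seo", "title": "Link Building Basics", "url": "https://example.com/links"},
    {"topic": "seo", "title": "Keyword Research 101", "url": "https://example.com/keywords"},
    {"topic": "email", "title": "Subject Lines That Work", "url": "https://example.com/subjects"},
]

def aggregate(items):
    """Aggregation: group items by topic and present them as is."""
    by_topic = defaultdict(list)
    for item in items:
        by_topic[item["topic"]].append(item)
    return dict(by_topic)

def curate(items, commentary_by_url):
    """Curation: keep only the hand-picked items and attach commentary."""
    return [
        {**item, "commentary": commentary_by_url[item["url"]]}
        for item in items
        if item["url"] in commentary_by_url
    ]

aggregated = aggregate(ITEMS)
curated = curate(
    aggregated["seo"],
    {"https://example.com/keywords": "A solid primer if you are new to keyword tools."},
)
print(curated)
```

Aggregation returns everything on the topic untouched, while curation narrows the list and adds your own input to each pick.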

While Sparktoro’s aggregated content, which we cited above, is designed for readers, there are other ways to extract news stories on a particular topic that better suit marketers or business owners.

Web scraping tools can help you gather not only the relevant articles but also the author, publication date, and links in a comprehensive format. Like any automation tool, web scraping tools work best with proxy servers. (More on this later.)

EXAMPLES OF CONTENT CURATION

It is clear that content aggregation is not content curation. The purpose of content aggregation is to list relevant content, but the goal of content curation is to add value for the readers. Therefore, aside from listing the articles, a curated piece also tells readers why the articles are relevant. Perhaps the best way to explain content curation is by providing some examples, so here are some good ones.

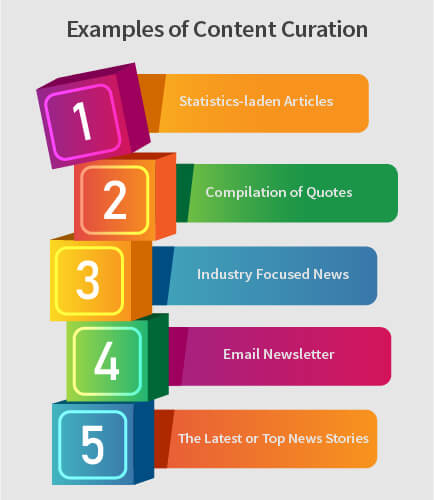

1. STATISTICS-LADEN ARTICLES

Content curation becomes the main strategy on websites that place high value on research and statistics. Hootsuite is a perfect example of this. They have several statistics-laden posts, and one of them is their article on Facebook statistics for marketers.

This picture shows Hootsuite’s article on Facebook statistics for marketers where they employed content curation.

In the post, Hootsuite combined 41 Facebook-related statistics from different websites. For every statistic, they added a commentary of 50 to 100 words.

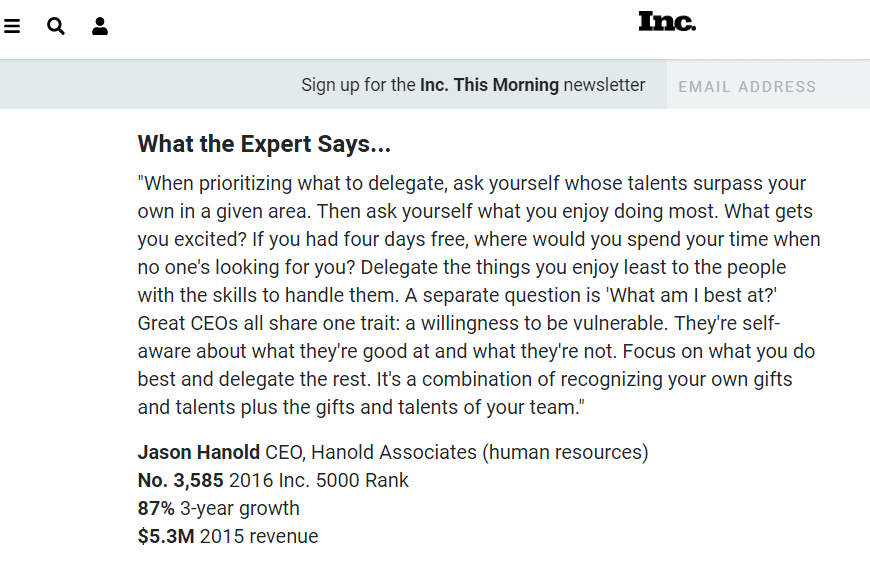

2. COMPILATION OF QUOTES

Another example of content curation is when the publisher searches for statements made by experts on a particular subject, collates these statements into one original piece, and publishes it on their website.

Take for example INC.com’s post about the one task that CEOs will never delegate as shown in the picture below:

INC. took a topic (delegation) and searched for statements from experts and CEOs about it. As a result, they created a perfectly curated piece of content.

3. INDUSTRY FOCUSED NEWS

There are also websites that specialize in pooling news stories related to a specific industry and presenting them to their readers together with a snippet or a short commentary. That is what Slashdot does. Their editors gather technology-related news stories and write a short introduction to each one to tell readers why the story is important.

This picture shows the home page of Slashdot which is a curation of technology-related news stories from different websites.

4. EMAIL NEWSLETTER

Content curation can also go beyond blog posts, as Launch Ticker has done. They compile top news stories in the tech industry, summarize each article into 300 words for better readability, and send this compilation in an email. Readers can click on the link to read the full story of any article they are interested in.

Launch Ticker even went as far as monetizing content curation since you need to sign up in order to receive their daily curated newsletter.

5. THE LATEST OR TOP NEWS STORIES

Articles that cover the top news stories for the day or the week are very common, and they are an example of content curation. They come with a range of titles such as “What You Need To Know Today” or “The Week at a Glance.” Whatever they are labelled, readers will immediately know that they will be browsing through trending stories that happened or are happening.

Take a look at The Week’s Five Things to Know to see a perfect curation of the latest news stories:

This picture shows how The Week has curated the latest and most trending stories.

WEB SCRAPING VS MANUAL RESEARCH

To come up with perfectly curated content, you need to gather the top stories, news articles, and social media posts about the topic you have in mind. In short, you have to do some research.

1. MANUAL RESEARCH

You can do the research manually, which means you Google the topic, go through all the search results, and select the ones that best fit what you have in mind. With this method, you have to deal with problems such as information noise, ads, and the sheer number of search results.

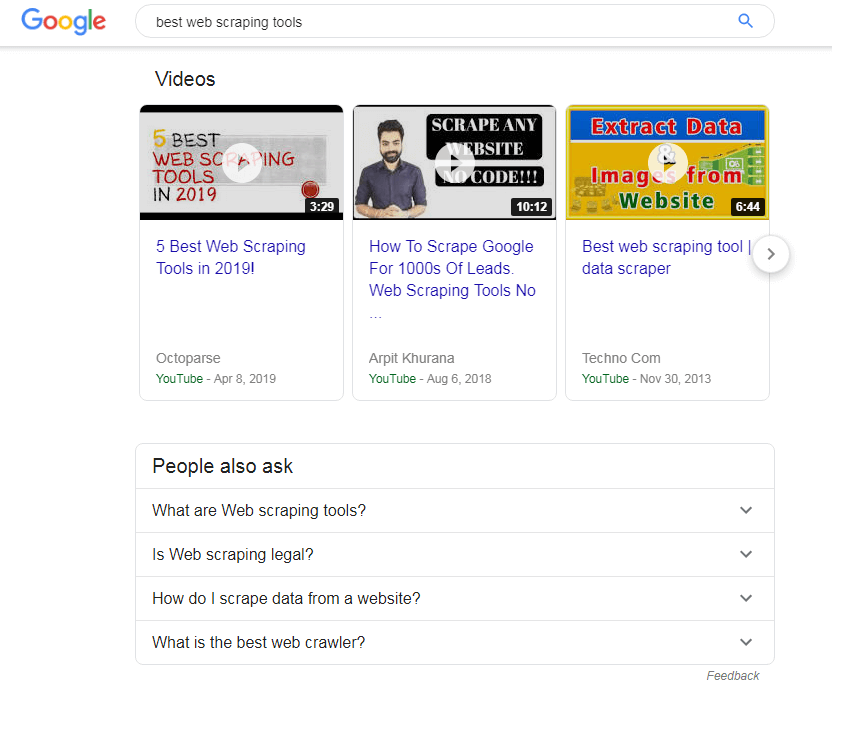

Let’s say you want to curate content about the best web scraping tools used by marketers. You search on Google using the keyword “web scraping tools” and look at the first page of the SERP. You will notice these things:

Laden with ads. The first page of the SERP is not as reliable as it was in the past. Before you reach the organic results, you will often see ads at the top of the page.

This picture shows how the first page of the search engine results contains an ad or two.

The web scraping tools that are advertised may not be the best tools used by marketers. They appear on the first page because they are sponsored content, and that alone does not mean they should be included in your curated content.

Information noise. There is a lot of unfiltered information in the search results that is ultimately useless to you. You have to spend time filtering and sifting through all the information noise.

This image shows the information noise you will be getting when you manually research for your content curation.

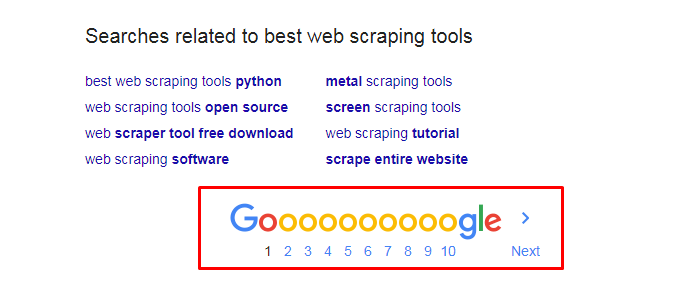

The volume of data presented. When people search for a topic on Google or any other search engine, they rarely go beyond the first page. But since you are curating content and looking for the best stories, and the first page is not that helpful, you need to go through each page of the SERP to get what you truly need.

Imagine going through each and every page of the SERP:

This picture shows the exhaustive number of pages in a search result.

After your research, you may very well end up tired at best, and confused and back at square one in the worst-case scenario.

2. WEB SCRAPING

Web scraping means automating the research process with the use of tools. As I mentioned near the beginning of this article, there are web scraping tools that can help you gather all the articles relevant to the topic, as well as the author, publish date, and all the URLs in one comprehensive report.
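To give a feel for what such a tool does under the hood, here is a minimal sketch that pulls the title, author, publish date, and links out of one page using only Python's standard library. The sample HTML and the class name `ArticleParser` are made up for illustration, and the assumption that a page exposes its author and date through these particular `meta` tags will not hold for every website.

```python
from html.parser import HTMLParser

class ArticleParser(HTMLParser):
    """Collect the title, author, publish date, and links from one HTML page."""

    def __init__(self):
        super().__init__()
        self.record = {"title": "", "author": None, "published": None, "links": []}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            # Assumed markup: many sites use these meta tags, but not all do.
            if attrs.get("name") == "author":
                self.record["author"] = attrs.get("content")
            elif attrs.get("property") == "article:published_time":
                self.record["published"] = attrs.get("content")
        elif tag == "a" and attrs.get("href"):
            self.record["links"].append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.record["title"] += data

def parse_article(html):
    """Parse one page and return its record as a plain dictionary."""
    parser = ArticleParser()
    parser.feed(html)
    parser.close()
    parser.record["title"] = parser.record["title"].strip()
    return parser.record

# Made-up page used only to demonstrate the parser.
SAMPLE_HTML = """
<html><head>
<title>Ten Scraping Tools</title>
<meta name="author" content="Jane Doe">
<meta property="article:published_time" content="2020-01-15">
</head><body><a href="https://example.com/tools">Tools</a></body></html>
"""

record = parse_article(SAMPLE_HTML)
print(record)
```

Run over a list of pages, the resulting records can be written to a spreadsheet or CSV file, which is essentially the "comprehensive report" described above.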

Instead of spending hours or even days doing manual research, you can have web scraping tools deliver the information you want in a matter of minutes. They can also let you see how the articles are doing in terms of the number of views and shares, so you will immediately know whether an article is popular or not.

This feature is very important since you would only want articles that are faring well to be included in your curated content. Who would want to put badly performing articles in their curated content?

The ability to see the number of views and shares is also an added point for web scraping as opposed to doing manual research. Being on the first page of the search engine results does not necessarily mean that the content is popular, as it only signifies that the content has passed Google’s algorithm with flying colours.
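Once you have view and share counts, shortlisting becomes a simple filter and sort. The sketch below assumes each scraped article is a dictionary with `views` and `shares` keys; the sample numbers, the 100-share threshold, and the function name `top_performers` are arbitrary choices for illustration.

```python
# Made-up engagement numbers for four scraped articles.
ARTICLES = [
    {"title": "Guide A", "views": 12000, "shares": 340},
    {"title": "Guide B", "views": 800, "shares": 12},
    {"title": "Guide C", "views": 45000, "shares": 1250},
    {"title": "Guide D", "views": 9000, "shares": 340},
]

def top_performers(articles, min_shares=100, limit=3):
    """Drop weak articles, then rank the rest by shares, breaking ties on views."""
    popular = [a for a in articles if a["shares"] >= min_shares]
    popular.sort(key=lambda a: (a["shares"], a["views"]), reverse=True)
    return popular[:limit]

shortlist = top_performers(ARTICLES)
print([a["title"] for a in shortlist])  # ['Guide C', 'Guide A', 'Guide D']
```

You still decide what makes the final cut, but the list you read through is much shorter.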

Web scraping is a less tedious, more accurate, and faster way to aggregate the content that will be the basis of your content curation.

WEB SCRAPING TIPS AND TRICKS

Now that we have established that web scraping is the best method to assist you with content curation, compared to doing manual research, you might want to know some web scraping techniques that will help you stay afloat.

Keep in mind that when using web scraping tools, or any automation tools for that matter, you should avoid getting blocked. You have to learn how to scrape the web without getting blocked or misled. Web scraping has only two enemies: getting blocked and obtaining misleading information. But there is no need to worry, as there are a lot of ways to get around these issues.

Scrape the web behind proxies designed for market researchers. Web scraping for content curation is a type of market research in a way. You are gathering data that you need to get ahead in the market; it just so happens that the data you’re gathering are articles, news stories, videos, and other types of content. All the same, you are in danger of getting blocked if the websites you crawl notice that the requests come from automation bots.

They can easily detect bots too. All they have to do is look at the IP address and the manner by which the requests are being sent. If the web scraping tools are sending hundreds of requests every minute, it’s obvious that the requests are not from a human being. They will block your IP address at once.

However, if you employ proxy servers, you can assign each bot a different IP address so the requests will appear to come from different users.
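As a rough illustration, the sketch below rotates through a small pool of proxies with Python's standard `urllib`, so that each request goes out through the next address in the pool. The proxy addresses are placeholders from a reserved documentation range, and the names `next_opener` and `fetch` are hypothetical; a real setup would use the addresses and credentials from your proxy provider.

```python
import itertools
import urllib.request

# Placeholder proxy addresses; replace with the ones from your provider.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]
_proxy_cycle = itertools.cycle(PROXIES)

def next_opener():
    """Return the next proxy in the pool and an opener that routes through it."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)

def fetch(url, timeout=10.0):
    """Download one page through the next proxy in the rotation."""
    _, opener = next_opener()
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Round-robin rotation is the simplest scheme. Picking a proxy at random, or retiring one after it gets blocked, are common variations.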

Using web scraping proxies is the most reliable way to avoid getting blocked, but you can also use the other methods on this list.

Spoof user-agent headers. Automation bots identify themselves through user-agent headers. Websites can also detect that multiple requests are coming from a bot by looking at the user-agent header. What you can do is compile a list of different user agents and randomly assign one to each request.
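A minimal sketch of that idea, again with the standard `urllib`: each request is built with a user-agent string picked at random from a list. The strings below are only examples of what browser user agents look like, and `build_request` is a hypothetical helper name.

```python
import random
import urllib.request

# Example browser-style user-agent strings; keep your own list up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0",
]

def build_request(url):
    """Create a request object carrying a randomly chosen User-Agent header."""
    headers = {"User-Agent": random.choice(USER_AGENTS)}
    return urllib.request.Request(url, headers=headers)

request = build_request("https://example.com/")
print(request.get_header("User-agent"))
```

The request object can then be passed to `urllib.request.urlopen` or to an opener that routes through a proxy.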

Change your crawling pattern. Human behaviour is random and unpredictable, and so are human search and request patterns. The behaviour of a bot, on the other hand, is the opposite, which makes it easy to spot. Bots are programmed to execute their functions in the same manner every time, so everything follows the same exact pattern.

When websites detect bot-like behaviour, they will automatically block your access. Changing the crawling pattern is, therefore, the logical thing to do to avoid detection. There are a lot of things you can do, from simulating cursor movements to accessing random web pages and links.
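Cursor movements need a browser automation tool, so the sketch below sticks to two simpler ways of breaking a fixed pattern: visiting pages in a shuffled order and pausing for a random interval between requests. The delay range of 2 to 8 seconds and the names `crawl_schedule` and `crawl` are assumptions for illustration, and `fetch` stands for whatever download function you already use.

```python
import random
import time

def crawl_schedule(urls, min_delay=2.0, max_delay=8.0, rng=None):
    """Return (url, pause_in_seconds) pairs in a shuffled order."""
    rng = rng or random.Random()
    shuffled = list(urls)
    rng.shuffle(shuffled)
    return [(url, rng.uniform(min_delay, max_delay)) for url in shuffled]

def crawl(urls, fetch, sleep=time.sleep):
    """Fetch every URL once, in random order, with a random pause after each."""
    for url, delay in crawl_schedule(urls):
        fetch(url)
        sleep(delay)
```

Slowing down this way also keeps the request rate far below the hundreds of requests per minute that give a bot away.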

Web scraping is the best method to help with your content curation. It enables you to pool relevant content in an automated, fast, and more accurate way compared to doing it manually. No method is without issues, though, and when it comes to web scraping tools, there is a chance that you will get blocked. Using the tips above will help you scrape the web more effectively and keep you from getting blocked by the websites you are crawling.

HOW OFTEN SHOULD YOU CURATE CONTENT?

At this point, you already know the importance of content curation and how web scraping helps make the process faster, easier, and more cost-effective. You also need to know how often you should curate, since it is not advisable for all your content to be the curated kind. Original content is still necessary, and the bulk of your content strategy should focus on that.

As mentioned earlier in this post, Curata recommends that 65% of your content be original articles, and curated content should make up 25% of the total content. The remaining 10% can be syndicated content, although this depends on your brand positioning.

Let’s focus on the 25% of your content. How often should websites curate content from other websites? Curata put the same question to more than 500 of its global clients and found out the following:

- 16% curate content on a daily basis

- 13% curate content three days a week

- 19% curate content once a week

- 17% curate content monthly

- Over 20% curate content quarterly or less frequently

- 12% never do content curation

As you can see, 48% of respondents say that they curate content weekly or more frequently. If such a large share of brands and businesses is doing it regularly, that suggests it is effective. So the answer to the question is to try curating content at least once a week. This way, you can give your original ideas a rest for a while each week.
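The 48% figure comes from adding up the three most frequent groups in the list above, which is easy to check. The dictionary keys below are my own shorthand for the survey answers.

```python
# Percentages from the survey results listed above.
survey = {
    "daily": 16,
    "three days a week": 13,
    "once a week": 19,
    "monthly": 17,
    "never": 12,
}

# Everyone who curates at least once a week.
weekly_or_more = survey["daily"] + survey["three days a week"] + survey["once a week"]
print(weekly_or_more)  # 48
```

The "quarterly or less frequently" group is left out of the dictionary because the source only gives it as "over 20%".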

SOME THINGS TO REMEMBER WHEN CURATING

Content curation is a great boost to your content marketing strategy, and even to your overall SEO strategy, since it allows you to link to authoritative websites. Do it with a web scraper and proxies, and you are far more likely to get all the relevant data in a timely manner. Do it weekly to help spread out your content ideas a bit.

Aside from these two tips, here are some other things you have to remember when curating content:

Add value: Remember that the goal of content curation is to add value. Don’t just list the content you’ve gathered from around the web, but provide some snippets to give readers a glimpse. It’s even better if you write a short commentary or summary of the content to show readers that you have truly filtered your list and that every piece is handpicked, relevant, and important.

Think about your reader: Even when the content is not from your own website, it’s still important to give it a voice that is consistent with yours. You still have to make sure that the summary or commentary is targeted to your reader, and not done haphazardly for the sake of putting it out there quickly.

Get content from different sources: Picking the same sources again and again in your curated content will make them look sponsored. Don’t make your readers think that they are paid advertisements, and make sure that you pick different sources. While blogs are the most common sources, you can also pick trade publications, YouTube or other video streaming platforms, news media outlets, and social media posts. The key is to make sure that the source is authoritative.

Keep it simple and organized: Sorting your content into categories makes it easier for your readers to access them. They can also understand your website better if you have categories. Don’t forget to include the categories in your metadata so they become visible to search engines. This way, your SEO strategy is not left out.

Cross-promote your curated content: Promoting your content on social media, email newsletters, and other digital channels should not be limited to your original content. Promote all of your content, curated pieces included, on different platforms so it can reach a wider audience.

THE BOTTOM LINE

To recall, you learned what content curation is and how it differs from content syndication and content aggregation. You also learned that web scraping is the best way to gather content from around the web and that manual research is tedious, time-consuming, and not practical at all.

You can also check this web scraping guide and web scraping for business to learn more.

We also established that many brands and businesses curate at least weekly, so try to do it at that frequency as well. Most importantly, remember that the purpose of content curation is not only to meet the demand for content, but also to add value for your readers.

When done the right way, content curation can boost your website’s popularity, increase the number of visitors and engagement, and ultimately, it can generate leads for your business. Like a curator at an art gallery, you can have fun curating but do so in a meticulous way and with utmost seriousness.

An added bonus is that you will learn a lot more about the industry you’re in when you curate content since you become exposed to relevant content from different websites, some of which are industry leaders.

Don’t forget to use proxies when scraping the web and to follow the other tips I listed above. This way, you will avoid getting banned by websites that are always on the lookout for automation tools.

Limeproxies offers both private and premium proxies that can protect you while scraping the web. With features such as virgin proxies and dedicated IP addresses on any service plan, Limeproxies can help you with your content curation.

About the author

Rachael Chapman

A Complete Gamer and a Tech Geek. Brings out all her thoughts and Love in Writing Techie Blogs.
